⚡ Quick News

- AI Firms Urged to Calculate Superintelligence Risk: MIT physicist Max Tegmark suggests that AI companies calculate the probability that an artificial superintelligence (ASI) escapes human control, much as physicists calculated the risks before the first nuclear test. His proposed "Compton constant" aims to foster global collaboration on AI safety.

- Investors Fear AI Threat to Alphabet’s Search Business: Alphabet's shares dropped nearly 7% after Apple's Eddy Cue said Apple is looking at adding AI search options to Safari and that searches in Safari had declined. Concerns are mounting that AI tools like ChatGPT could challenge Google's market lead.

- Gemma AI Tops 150M Downloads: Google's Gemma AI models have passed 150 million downloads. However, they still trail Meta's Llama, which has over 1.2 billion downloads.

- SoftBank Endorses OpenAI’s Public Benefit Corporation Plan: SoftBank has expressed support for OpenAI's transition to a public benefit corporation. The move could align OpenAI’s business strategy with its broader ethical mission.

OpenAI's Stargate project, a $500 billion initiative to build AI infrastructure across the U.S., may face significant setbacks due to market instability and external economic pressures. Announced in January with Japanese tech giant SoftBank as a partner, Stargate is now threatened by rising tariffs and investor wariness. Tariffs on crucial components such as server racks and AI chips could raise construction costs by 5–15%, making investors uneasy. Market hesitancy is further heightened by the availability of cheaper alternatives such as China’s DeepSeek R1.

Key Highlights:

- OpenAI planned to raise the $500 billion from multiple investors, including JPMorgan and Brookfield Asset Management, none of which has made a firm commitment yet.

- Increased costs due to tariffs on essential AI components are a primary concern.

- Cheaper AI models like DeepSeek R1 are becoming competitive alternatives.

- Major tech companies, including Microsoft and Amazon, are pulling back from expansive data center projects.

If you're enjoying Nerdic Download, please forward this article to a colleague. It helps us keep this content free.

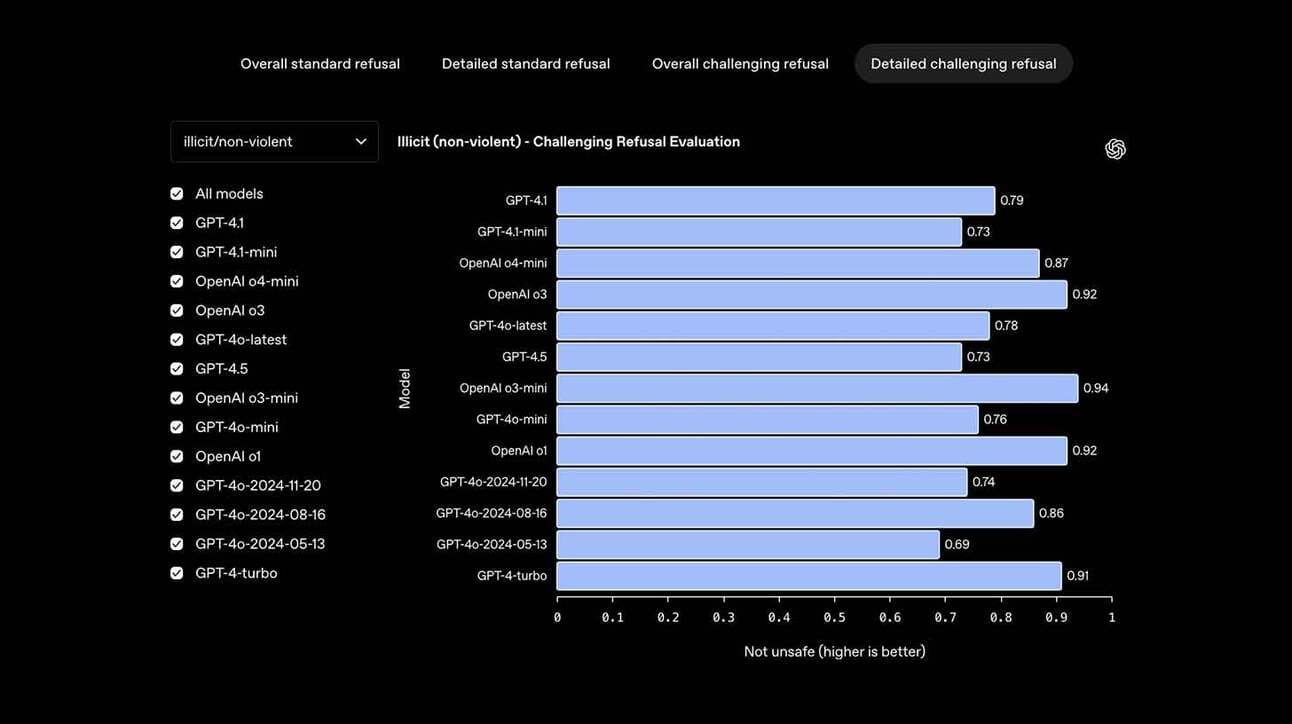

OpenAI has introduced the Safety Evaluations Hub, a step toward greater transparency and accountability in AI model testing. The hub publicly reports how OpenAI's models perform on evaluations of harmful content generation, hallucination rates, and susceptibility to jailbreak attempts. By updating these results regularly, OpenAI aims to bolster public trust and underscore its commitment to safety after recent criticism and internal resignations over safety concerns.

Key Highlights:

- The Safety Evaluations Hub will feature ongoing assessments of harmful content avoidance and factual accuracy.

- The initial evaluations cover harmful content, jailbreak vulnerabilities, hallucination rates, and adherence to instructions.

- OpenAI will update these evaluations alongside new model releases to provide continuous safety assurance.

- The platform is part of OpenAI’s response to recent scrutiny and efforts to communicate proactive safety measures.

Google's latest innovation, AlphaEvolve, is setting new benchmarks in solving complex mathematical and computational problems. The coding agent combines Gemini models with evolutionary strategies to discover algorithms that address long-standing scientific challenges and improve operational efficiency. Its most notable result is a procedure for multiplying 4×4 complex-valued matrices with 48 scalar multiplications, improving on Strassen's well-known 1969 algorithm, a feat that underscores its potential to transform fields that rely on mathematical computation.

Key Highlights:

- AlphaEvolve combines multiple Gemini models to generate and refine code, using Gemini Flash to explore many ideas and Gemini Pro to provide deeper suggestions.

- Applied to over 50 open mathematical problems, it matched the best known solutions in most cases and found improved or novel solutions in others.

- AlphaEvolve already helps optimize Google’s internal processes, including data center scheduling and AI model training.

- AlphaEvolve has also shown promise in contributing to chip design improvements.
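Some background on the baseline AlphaEvolve is measured against: Strassen's 1969 method multiplies two 2×2 matrices (or 2×2 grids of blocks) with 7 multiplications instead of the naive 8, so applying it recursively to 4×4 matrices takes 7 × 7 = 49 scalar multiplications. The Python sketch below implements the classic Strassen recursion, not AlphaEvolve's 48-multiplication procedure, which is not reproduced here. The helper names (`add`, `sub`, `split`, `join`, `strassen`, `naive`) are our own, and the sketch assumes square matrices whose size is a power of two.

```python
from typing import List, Tuple

Matrix = List[List[complex]]


def add(X: Matrix, Y: Matrix) -> Matrix:
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X: Matrix, Y: Matrix) -> Matrix:
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split(M: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Split a 2^k x 2^k matrix into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def join(c11: Matrix, c12: Matrix, c21: Matrix, c22: Matrix) -> Matrix:
    """Reassemble four quadrants into one matrix."""
    return ([a + b for a, b in zip(c11, c12)] +
            [a + b for a, b in zip(c21, c22)])


def strassen(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """Multiply 2^k x 2^k matrices and count the scalar multiplications used."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]], 1
    a11, a12, a21, a22 = split(A)
    b11, b12, b21, b22 = split(B)
    # Strassen's seven block products (instead of the naive eight).
    pairs = [
        (add(a11, a22), add(b11, b22)),  # M1
        (add(a21, a22), b11),            # M2
        (a11, sub(b12, b22)),            # M3
        (a22, sub(b21, b11)),            # M4
        (add(a11, a12), b22),            # M5
        (sub(a21, a11), add(b11, b12)),  # M6
        (sub(a12, a22), add(b21, b22)),  # M7
    ]
    results = [strassen(X, Y) for X, Y in pairs]
    m1, m2, m3, m4, m5, m6, m7 = (r[0] for r in results)
    count = sum(r[1] for r in results)
    # Recombine the seven products using only additions and subtractions.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    return join(c11, c12, c21, c22), count


def naive(A: Matrix, B: Matrix) -> Matrix:
    """Textbook multiplication: n^3 scalar multiplications."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


if __name__ == "__main__":
    A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    B = [[1j, 0, 2, 1], [0, 1, 1j, 0], [3, 1, 0, 2], [1, 1, 1, 1]]
    C, mults = strassen(A, B)
    print(C == naive(A, B))  # True
    print(mults)             # 49, versus 64 for the naive method
```

Because Strassen's identities never rely on multiplication being commutative, they work on blocks as well as on numbers, which is what lets the 7-for-8 saving compound recursively: n×n matrices need about n^log2(7) ≈ n^2.81 multiplications instead of n^3.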

Anthropic is reportedly close to releasing new versions of its Sonnet and Opus models, featuring more advanced reasoning and expanded functionality. The updates stand out for their hybrid reasoning and tool use, which allow the models to catch and correct their own errors autonomously, a significant step toward more sophisticated AI systems. Anticipation is also building around a model codenamed Neptune, which is currently undergoing safety assessments.

Key Highlights:

- The new Sonnet and Opus models can switch between reasoning and tool use on their own, for example when improving code.

- Their ability to identify and fix coding issues without human input marks a significant advance.

- The release is coupled with Anthropic’s new bug bounty to further test and refine model capabilities.

- The Neptune model hints at a significant upgrade and is currently undergoing stringent safety evaluations.

🛠️ New AI Tools

- Google Gemini Integrates GitHub: Gemini Advanced users can now connect GitHub repositories to Gemini to generate and debug code more efficiently. The integration boosts productivity for developers working within Google's ecosystem.

- TikTok Introduces AI Alive: TikTok's AI Alive turns static images into videos, making video creation accessible to its 1.8B users. The feature lets anyone create video content without complex editing tools.

- Meta's Molecular Map for Drug Discovery: Meta's open-source model helps accelerate drug and materials discovery. It draws on extensive computational resources and billions of calculations to make research more efficient.

- Gemini AI Assistant Enhances In-Car Experience: Google is bringing Gemini to cars, offering hands-free help with tasks such as translation and navigation. The assistant aims to make travel safer and more productive with features like on-the-go brainstorming.
